What is MCP? The Universal Connector for AI Explained

TL;DR Abstract

The Model Context Protocol (MCP), an open standard introduced by Anthropic, is changing software development by giving AI models a universal interface for connecting securely to external tools and data. Instead of requiring a custom integration for every pairing, it lets AI assistants access real-time context, such as code, documents, and APIs, through a standardized client-server architecture. Grounding models in that context can reduce AI hallucinations, and it expands what AI can do, enabling automation in coding, documentation, testing, and CI/CD workflows. With rapid adoption by major industry players such as OpenAI, Microsoft, and Google DeepMind, MCP is establishing itself as a foundational standard for interoperable, "agentic" AI.

What Exactly Is the Model Context Protocol (MCP)?

At its core, MCP is an open protocol introduced by Anthropic in late 2024. Its fundamental purpose is to let AI models connect securely to external resources in a standardized way. Before MCP, integrating an AI model with each distinct data source or tool required a custom, one-off job. With M AI applications and N tools, that adds up to as many as M x N separate integrations, a complex and unsustainable engineering burden often dubbed the "M x N problem". MCP solves this by acting as a universal adapter: it defines a common language (built on JSON-RPC 2.0) that AI applications use to request data or trigger actions from any MCP-compatible service.
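
The arithmetic behind the "M x N problem" is easy to make concrete. With one-off connectors, every application needs its own integration with every tool; with MCP, each application implements a client once and each tool ships a server once, so the count grows additively instead of multiplicatively. The figures of 5 applications and 20 tools below are illustrative, not taken from any source.

```latex
\underbrace{M \times N}_{\text{one-off integrations}} = 5 \times 20 = 100
\qquad \text{vs.} \qquad
\underbrace{M + N}_{\text{MCP clients + servers}} = 5 + 20 = 25
```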

In non-technical terms, MCP allows an AI to "ask" different tools for help as easily as you'd plug in a USB device. An AI could say "open this file" or "run this query," and any compatible tool (like a file system, database, or API) would understand and respond. This means AI assistants are no longer confined to their training data; they can reach into code repositories, documentation, testing frameworks, and cloud services in real time, all through a single, consistent protocol.
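
To show what that "common language" looks like on the wire, here is a minimal Python sketch that builds the two requests from the paragraph above as JSON-RPC 2.0 messages. The method names `resources/read` and `tools/call` follow the method names used in the MCP specification; the file URI, the tool name `run_sql_query`, and its arguments are hypothetical, since a real server advertises its own tools.

```python
import json


def make_request(request_id, method, params):
    """Build a JSON-RPC 2.0 request as a plain dict."""
    return {"jsonrpc": "2.0", "id": request_id, "method": method, "params": params}


# "Open this file": read a resource identified by a URI (the path is hypothetical).
open_file = make_request(1, "resources/read", {"uri": "file:///project/README.md"})

# "Run this query": call a tool by name (tool name and SQL are hypothetical).
run_query = make_request(2, "tools/call", {
    "name": "run_sql_query",
    "arguments": {"sql": "SELECT count(*) FROM orders"},
})

for message in (open_file, run_query):
    print(json.dumps(message))
```

Because both messages share the same envelope (`jsonrpc`, `id`, `method`, `params`), a host can talk to a file server and a database server without bespoke glue for either.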

How Does MCP Work? Architecture in Plain Language

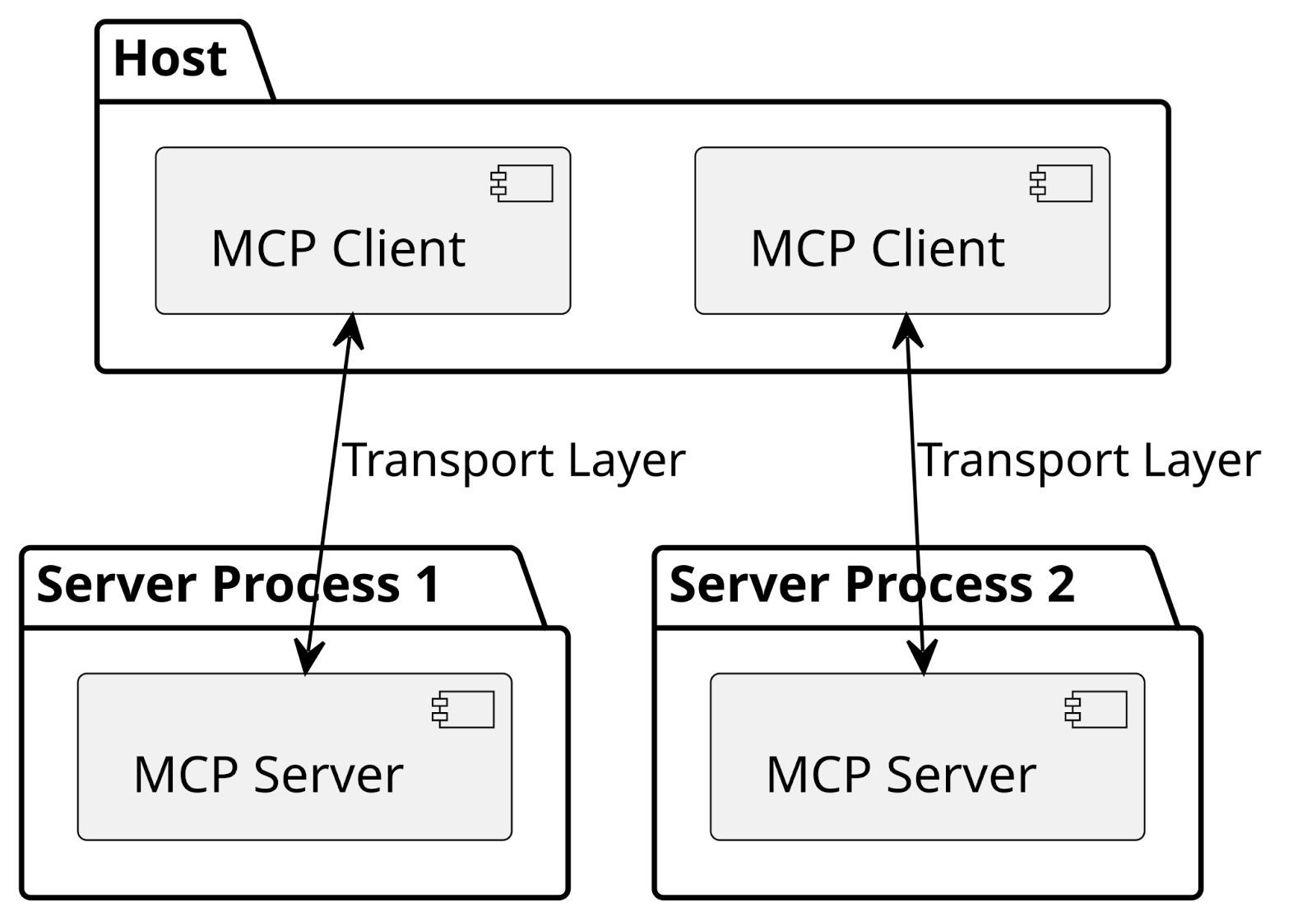

MCP operates on a modular, client-server architecture, conceptually similar to the Language Server Protocol (LSP), which standardized communication between code editors and the language servers that provide features such as autocompletion and go-to-definition.

- The MCP Host: This is the user-facing AI application or environment, such as a conversational AI, an AI-powered IDE (like VS Code or Cursor), or a custom agent system. The host contains the LLM and manages its interactions with external resources.

- The MCP Client: Located within the MCP host, each client maintains a one-to-one connection with a single MCP server. It translates the LLM's requests into structured MCP (JSON-RPC) messages, discovers what its server offers, and handles the two-way flow of information between the LLM and that server.

- The MCP Server: This is the external gateway that exposes specific data, tools, or capabilities to the AI system. Each server is typically a lightweight, standalone component dedicated to a single integration point, such as a PostgreSQL database, a GitHub repository, or a Slack instance.
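
The host/client/server split can be sketched in plain Python. In this toy version, under several simplifying assumptions, the host creates one client per server, each client discovers its server's tools with `tools/list`, and the host routes a tool call to whichever client owns that tool. The classes `ToyServer`, `ToyClient`, and `ToyHost` are hypothetical; a real client talks to its server over a transport such as stdio or HTTP and receives richer tool descriptions (including an input schema) rather than calling `handle()` directly.

```python
import json


class ToyServer:
    """Stand-in for an MCP server exposing a fixed set of tools (hypothetical)."""

    def __init__(self, name, tools):
        self.name = name
        self.tools = tools  # maps tool name -> Python callable

    def handle(self, raw):
        request = json.loads(raw)
        if request["method"] == "tools/list":
            result = {"tools": [{"name": n} for n in sorted(self.tools)]}
        elif request["method"] == "tools/call":
            params = request["params"]
            output = self.tools[params["name"]](**params.get("arguments", {}))
            result = {"content": [{"type": "text", "text": str(output)}]}
        else:
            # -32601 is the standard JSON-RPC 2.0 "Method not found" error code.
            return json.dumps({"jsonrpc": "2.0", "id": request["id"],
                               "error": {"code": -32601, "message": "Method not found"}})
        return json.dumps({"jsonrpc": "2.0", "id": request["id"], "result": result})


class ToyClient:
    """One client per server: serializes requests and parses responses."""

    def __init__(self, server):
        self.server = server
        self.next_id = 0

    def request(self, method, params=None):
        self.next_id += 1
        message = {"jsonrpc": "2.0", "id": self.next_id, "method": method,
                   "params": params or {}}
        response = json.loads(self.server.handle(json.dumps(message)))
        if "error" in response:
            raise RuntimeError(response["error"]["message"])
        return response["result"]


class ToyHost:
    """The host owns several clients and routes each tool call to the right one."""

    def __init__(self, servers):
        self.clients = [ToyClient(s) for s in servers]
        self.routes = {}
        for client in self.clients:  # discovery: ask every server what it offers
            for tool in client.request("tools/list")["tools"]:
                self.routes[tool["name"]] = client

    def call_tool(self, name, **arguments):
        result = self.routes[name].request("tools/call",
                                           {"name": name, "arguments": arguments})
        return result["content"][0]["text"]


host = ToyHost([
    ToyServer("math", {"add": lambda a, b: a + b}),
    ToyServer("text", {"shout": lambda s: s.upper()}),
])
print(host.call_tool("add", a=2, b=3))     # prints 5
print(host.call_tool("shout", s="hello"))  # prints HELLO
```

Keeping one client per server means a misbehaving or untrusted server only ever sees its own connection, and adding an integration is a matter of starting another server rather than changing the host.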

MCP servers expose their capabilities through three fundamental types of primitives:

- Resources: Used for read-only information retrieval from databases or data sources, like fetching a stock price or reading a file's content.

- Tools: Functions the AI can invoke to perform actions, often with a "side effect" on an external system, such as making an API call, running a calculation, or writing data to a database. This is what enables AI to draft an email or deploy an application.

- Prompts: Reusable templates and workflows that standardize common LLM-server communication patterns.
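
The three primitives differ mainly in intent, and that shows up in how a server handles them. The sketch below, a hypothetical in-memory server, answers `resources/read` with read-only content, `tools/call` with an action that mutates state, and `prompts/get` with a filled-in message template. The method names and result shapes are modeled on the MCP specification but trimmed to the essentials; `FILES`, `ORDERS`, `place_order`, and the prompt text are invented for illustration.

```python
import json

# Hypothetical in-memory data exposed by this toy server.
FILES = {"file:///notes/todo.txt": "ship v2\nwrite docs"}
ORDERS = []


def read_resource(params):
    """Resource: read-only lookup by URI; nothing on the server changes."""
    uri = params["uri"]
    return {"contents": [{"uri": uri, "mimeType": "text/plain", "text": FILES[uri]}]}


def call_tool(params):
    """Tool: performs an action; here the side effect is appending to ORDERS."""
    if params["name"] != "place_order":
        raise KeyError(params["name"])
    ORDERS.append(params["arguments"])
    return {"content": [{"type": "text", "text": f"order #{len(ORDERS)} placed"}]}


def get_prompt(params):
    """Prompt: a reusable template the host fills in and hands to the LLM.

    This toy server has a single prompt, so the requested name is not checked.
    """
    topic = params["arguments"]["topic"]
    text = f"Summarize the latest changes to {topic} in three bullet points."
    return {"messages": [{"role": "user", "content": {"type": "text", "text": text}}]}


HANDLERS = {
    "resources/read": read_resource,
    "tools/call": call_tool,
    "prompts/get": get_prompt,
}


def handle(raw):
    """Dispatch one JSON-RPC request to the handler for its method."""
    request = json.loads(raw)
    result = HANDLERS[request["method"]](request["params"])
    return {"jsonrpc": "2.0", "id": request["id"], "result": result}


reply = handle(json.dumps({
    "jsonrpc": "2.0", "id": 1, "method": "tools/call",
    "params": {"name": "place_order", "arguments": {"sku": "A1", "qty": 2}},
}))
print(reply["result"]["content"][0]["text"])  # order #1 placed
```

Keeping reads and writes on separate primitives is what lets a host treat them differently, for example reading resources freely while asking the user before any tool runs.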

It's important to note that MCP complements existing agent frameworks like LangChain; it standardizes the "hands" of an AI system, allowing it to interact with the world, rather than handling the "brain" or high-level orchestration.

Why Should You Care? The Benefits and Impact of MCP

MCP isn't just a technical curiosity; it represents a strategic shift that makes AI systems more capable, reliable, and scalable across the entire software development lifecycle.

Key Benefits:

- Increased AI Utility and Automation: By connecting AI to real-world services, MCP transforms AI from a static knowledge base into a dynamic, context-aware agent capable of complex actions, such as updating customer records or running financial calculations.

- Improved Scalability and Maintainability: By eliminating the need for custom integrations, MCP solves the "M x N problem," reducing development overhead, simplifying maintenance, and allowing for easier scaling of AI applications across various tools and models.

- Enhanced Performance and Efficiency: Some reported benchmarks suggest that MCP servers can outperform custom integrations, with higher throughput, lower memory usage, and lower CPU consumption, which can translate into lower operational costs.

- Reduced Hallucinations and Increased Factual Accuracy: LLMs sometimes generate plausible but incorrect information. MCP addresses this by giving LLMs a standardized channel to real-time, external data sources, grounding their responses in verifiable facts and helping reduce "hallucinations".

- Democratization of Tool Development: MCP empowers developers to create and share specialized tool servers, fostering a community-driven ecosystem of connectors for popular services and niche internal systems, lowering the barrier to intelligent automation. This standardization is even leading to the emergence of "Context-as-a-Service," where platforms offer managed MCP servers, simplifying integration complexities for developers.

Pioneering Use Cases Across the Software Development Lifecycle:

AI-Powered Coding and Automated Code Generation:

- Tools like GitHub Copilot and Replit Ghostwriter now use MCP to pull live context from your entire development environment. This means an AI can safely fetch repository information, perform code searches, and find function references, leading to more accurate code suggestions.

- MCP enables automation of routine dev tasks: AI agents can use a Git MCP server to summarize changes, pull bug ticket details from issue trackers like Jira, and even orchestrate creating branches, committing code, and submitting pull requests on GitHub.
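
From the protocol's point of view, a routine workflow like this is an ordered series of `tools/call` requests, possibly spread across several servers (Git, an issue tracker, GitHub). The sketch below assembles that sequence for a bug fix. Every tool name and argument (`get_issue`, `git_create_branch`, `create_pull_request`, and so on) is a hypothetical placeholder; a real agent would call `tools/list` on each connected server and use the names and schemas it reports.

```python
import json


def tool_call(request_id, name, arguments):
    """Wrap one tool invocation in a JSON-RPC 2.0 tools/call request."""
    return {"jsonrpc": "2.0", "id": request_id, "method": "tools/call",
            "params": {"name": name, "arguments": arguments}}


def plan_fix_workflow(repo_path, ticket_id, summary):
    """Return the ordered tools/call requests an agent might send for a bug fix.

    All tool names are hypothetical. The actual code edits would happen
    between creating the branch and reviewing the diff; they are omitted here.
    """
    branch = f"fix/{ticket_id.lower()}"
    steps = [
        ("get_issue", {"issue_key": ticket_id}),                      # issue tracker server
        ("git_create_branch", {"repo_path": repo_path, "branch_name": branch}),
        ("git_diff", {"repo_path": repo_path}),                       # summarize changes
        ("git_commit", {"repo_path": repo_path, "message": f"{ticket_id}: {summary}"}),
        ("create_pull_request", {"head": branch, "base": "main", "title": summary}),
    ]
    return [tool_call(i, name, args) for i, (name, args) in enumerate(steps, start=1)]


for request in plan_fix_workflow("/src/shop", "BUG-142", "Handle empty cart at checkout"):
    print(json.dumps(request["params"]))
```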

Intelligent Documentation and Knowledge Base Integration:

- MCP bridges the gap between code and documentation. AI can connect to API specifications (like OpenAPI) via an MCP server to automate documentation drafting, generating endpoint descriptions and code snippets.

- It enables continuous documentation maintenance by comparing live code/APIs with published docs to flag discrepancies, ensuring documentation stays up-to-date.

- AI assistants can answer natural language questions about internal knowledge bases (wikis, runbooks) by retrieving exact snippets via MCP instead of relying on memory, which reduces "hallucinations".
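
The discrepancy check described above can be as simple as a set difference. This sketch assumes the live API description has already been fetched as an OpenAPI document (for example, through an MCP resource) and that the published docs have been reduced to `(METHOD, path)` pairs; it reports operations that exist in the API but not in the docs, and vice versa. The function name `find_doc_drift` and its input format are hypothetical.

```python
# HTTP methods that OpenAPI allows as operation keys on a path item.
HTTP_METHODS = {"get", "put", "post", "delete", "options", "head", "patch", "trace"}


def find_doc_drift(openapi_spec, documented):
    """Compare operations in an OpenAPI document with those in published docs.

    openapi_spec: dict shaped like OpenAPI, {"paths": {path: {method: {...}}}}.
    documented: set of (METHOD, path) pairs extracted from the docs.
    """
    live = {
        (method.upper(), path)
        for path, operations in openapi_spec.get("paths", {}).items()
        for method in operations
        if method.lower() in HTTP_METHODS
    }
    return {
        "undocumented": sorted(live - documented),  # in the API, missing from docs
        "stale": sorted(documented - live),         # in the docs, gone from the API
    }


spec = {"paths": {
    "/users": {"get": {}, "post": {}, "parameters": []},
    "/users/{id}": {"get": {}},
}}
docs = {("GET", "/users"), ("GET", "/users/{id}"), ("DELETE", "/users/{id}")}
drift = find_doc_drift(spec, docs)
print(drift)  # {'undocumented': [('POST', '/users')], 'stale': [('DELETE', '/users/{id}')]}
```

Non-method keys that OpenAPI allows on a path item, such as `parameters` or `summary`, are filtered out so they are not mistaken for operations.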

Test Case Synthesis and Automated Quality Assurance (QA):

- MCP allows AI to interact with running software and test environments. For instance, an official Playwright MCP server exposes browser control commands, enabling an AI to navigate pages, click buttons, and fill forms in a real browser.

- With real-time feedback (HTML, page title, screenshots), AI can generate accurate end-to-end test cases and executable test scripts from natural language prompts. Without this live context, the AI would have to guess at page structure and element selectors, so the tests it writes would often fail.

- AI agents can even run unit tests or linters on a codebase, interpreting failing test outputs to pinpoint bugs, effectively bringing AI into the QA loop.
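
For the QA loop, the useful step is turning raw test output into something the agent can reason about. The sketch below assumes the agent ran the suite through some test-running tool (hypothetical here) and received pytest-style output, whose short summary lists each failure as `FAILED <file>::<test> - <reason>`; it extracts those lines into structured findings. Other test runners would need their own patterns.

```python
import re

# Matches pytest's short-summary failure lines, for example:
# "FAILED tests/test_cart.py::test_empty_cart - AssertionError: assert 0 == 1"
FAILED_LINE = re.compile(r"^FAILED (?P<file>[^:\s]+)::(?P<test>\S+)(?: - (?P<reason>.*))?$")


def summarize_failures(output):
    """Extract file, test id, and reason for each failed test in the output."""
    findings = []
    for line in output.splitlines():
        match = FAILED_LINE.match(line.strip())
        if match:
            findings.append({
                "file": match["file"],
                "test": match["test"],
                "reason": match["reason"] or "no message",
            })
    return findings


# Hypothetical output returned by a test-running tool.
sample = """
=========================== short test summary info ===========================
FAILED tests/test_cart.py::test_empty_cart - AssertionError: assert 0 == 1
FAILED tests/test_price.py::TestTax::test_rounding
========================= 2 failed, 14 passed in 0.42s =========================
"""

for finding in summarize_failures(sample):
    print(finding)
```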

CI/CD Pipeline Automation and Release Tasks:

- DevOps engineers are experimenting with AI agents that can manage build and release pipelines by connecting to infrastructure via MCP. An AI agent can detect a new commit, use a Docker MCP server to build an image, then a Kubernetes MCP server to generate and apply deployment manifests.

- This allows AI to orchestrate entire CI/CD pipelines and handle routine deployment and release tasks, such as compiling release notes or automatically notifying teams of deployment status via Slack connectors.

- MCP facilitates DevOps incident response: AI integrated with monitoring tools could detect an alert, run diagnostics via log-analysis and metrics MCP tools, and even execute mitigation scripts, all while keeping humans informed.
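
The deployment flow above, including the "keeping humans informed" part, can be sketched as a loop over tool calls with an approval gate before any step that changes shared infrastructure. The server names (`docker`, `kubernetes`, `slack`), tool names, and arguments are hypothetical, and `execute`, `approve`, and `notify` are injected stand-ins for a real MCP tool call, a human reviewer, and a chat notification.

```python
from dataclasses import dataclass


@dataclass
class Step:
    server: str        # MCP server that handles the call (hypothetical names)
    tool: str          # tool name on that server (hypothetical)
    arguments: dict
    destructive: bool  # changes shared infrastructure, so needs human approval


def run_pipeline(steps, execute, approve, notify):
    """Run steps in order, pausing for approval before any destructive step.

    execute(step) -> str performs the tool call, approve(step) -> bool asks a
    human, and notify(text) posts a status update. Returns True if every step ran.
    """
    for step in steps:
        if step.destructive and not approve(step):
            notify(f"Stopped before {step.server}.{step.tool}: approval denied")
            return False
        result = execute(step)
        notify(f"{step.server}.{step.tool}: {result}")
    notify("Pipeline finished")
    return True


pipeline = [
    Step("docker", "build_image", {"context": ".", "tag": "shop:1.4.0"}, destructive=False),
    Step("kubernetes", "apply_manifest", {"file": "deploy.yaml"}, destructive=True),
    Step("slack", "post_message", {"channel": "#releases", "text": "shop 1.4.0 deployed"},
         destructive=False),
]

log = []
ok = run_pipeline(
    pipeline,
    execute=lambda step: "ok",   # stand-in for a real tools/call
    approve=lambda step: False,  # simulate a reviewer declining the deploy
    notify=log.append,
)
print(ok, log)
```

Marking destructiveness per step, rather than per server, lets the same Kubernetes server offer read-only diagnostics freely while still gating changes behind a human.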

Orchestrating Tools Safely: What Developers Should Know

Integrating with MCP opens powerful possibilities, but it also comes with responsibilities, and security is the top priority.

- Least-Privilege and User Consent: MCP is designed with security in mind. AI models only see what an MCP server explicitly exposes, and actions can be gated behind user approvals. Developers must ensure MCP servers have appropriate authentication and scope, using fine-grained, least-privilege role-based access control (RBAC).

- Transparency and Auditing: Because every interaction is a discrete JSON-RPC message, hosts and servers can keep an audit log recording every tool invocation made by the AI. This transparency is crucial for building trust in AI-driven workflows.

- Best Practices: Developers should implement robust authentication (e.g., OAuth 2.1), enforce end-to-end encryption, centralize logs to a SIEM for anomaly detection, and be mindful of risks like prompt injection and tool misuse. Users should only add servers from trusted sources and review permissions, as MCP servers can run arbitrary code.

- For tool providers: Creating an MCP integration is straightforward with the SDKs available in multiple languages (Python, TypeScript, C#, Java). Building one makes your tool AI-ready in a standardized way, ensuring interoperability with any MCP-compatible AI agent.
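
The least-privilege, consent, and auditing practices above can be combined in a thin wrapper that sits between the LLM and an MCP client. In this sketch, a tool must be on an explicit allowlist, tools with side effects additionally need the user's approval, and every outcome is appended to an audit log as a JSON line that could later be shipped to a SIEM. `GuardedToolCaller` and its parameters are hypothetical; enforcing policies like these is up to the host and server implementations.

```python
import json
import time


class GuardedToolCaller:
    """Wraps tool calls with an allowlist, a consent check, and an audit log.

    forward(name, arguments) is whatever actually sends tools/call to the
    server; it is injected so the sketch does not depend on a specific SDK.
    """

    def __init__(self, forward, allowed_tools, needs_consent, ask_user):
        self.forward = forward
        self.allowed_tools = set(allowed_tools)  # least privilege: explicit allowlist
        self.needs_consent = set(needs_consent)  # tools with side effects
        self.ask_user = ask_user
        self.audit_log = []                      # one JSON line per attempted call

    def _record(self, name, arguments, outcome):
        self.audit_log.append(json.dumps({
            "ts": time.time(), "tool": name, "arguments": arguments, "outcome": outcome,
        }))

    def call(self, name, arguments):
        if name not in self.allowed_tools:
            self._record(name, arguments, "blocked: not allowlisted")
            raise PermissionError(f"tool {name!r} is not allowlisted")
        if name in self.needs_consent and not self.ask_user(name, arguments):
            self._record(name, arguments, "blocked: user declined")
            raise PermissionError(f"user declined {name!r}")
        try:
            result = self.forward(name, arguments)
        except Exception as exc:
            self._record(name, arguments, f"error: {exc}")
            raise
        self._record(name, arguments, "ok")
        return result


caller = GuardedToolCaller(
    forward=lambda name, args: f"{name} done",  # stand-in for a real tools/call
    allowed_tools={"read_file", "write_file"},
    needs_consent={"write_file"},
    ask_user=lambda name, args: False,          # simulate the user saying no
)
print(caller.call("read_file", {"path": "README.md"}))  # read_file done
for name in ("write_file", "delete_repo"):
    try:
        caller.call(name, {})
    except PermissionError as err:
        print("refused:", err)
print(len(caller.audit_log))  # 3
```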

The New Foundation of AI Interoperability

The Model Context Protocol is proving to be a game-changer for developer workflows, transforming AI from a passive assistant into an active participant in the software lifecycle. By allowing AI systems to "plug into" and interact with code editors, documentation, test frameworks, CI/CD pipelines, and cloud services, MCP unlocks use cases that were impractical just a short time ago.

The rapid adoption of MCP by major industry players like OpenAI, Anthropic, Microsoft, and Google DeepMind—despite being rivals in other areas—underscores its potential to become a universal standard for AI-tool integration. As Google DeepMind CEO Demis Hassabis put it, MCP is "rapidly becoming an open standard for the AI agentic era".

For developers, this broad adoption makes investing in MCP a relatively safe bet for the future. Whether you're integrating AI into your product or enhancing your workflow with a custom AI agent, MCP offers a ready-made highway to do so, making modern software development a more automated, context-rich, and intelligent process than ever before.

References

- The Model Context Protocol (MCP) by Anthropic: Origins, functionality, and impact - Wandb

- What is Model Context Protocol (MCP)? A Guide - Google Cloud

- Model Context Protocol - Wikipedia

- What is Model Context Protocol (MCP)? - IBM

- Model Context Protocol: Introduction - modelcontextprotocol.io

- What Is the Model Context Protocol (MCP) and How It Works - Descope

- MCP: the Universal Connector for Building Smarter, Modular AI Agents - InfoQ

- What's the Difference Between an MCP Server and MCP Client? - Prefactor

- How OpenAI Agent Mode Uses the Model Context Protocol (MCP) - James Fahey

- MCP in Copilot Studio - Microsoft Copilot Blog

- Microsoft Build 2025: The age of AI agents and building the open agentic web - Microsoft

- Using MCP in Zed IDE - Zed Code Editor Documentation

- Let AI Explore Your Site & Write Tests with Playwright MCP - YouTube

- Automating Kubernetes CI/CD with AI + MCP - Kirill Petropavlov, Medium (May 2025)

- AI Model Context Architecture (MCP) Scaling: Load Balancing, Queuing, and API Governance - Valdez Ladd

- MCP Security Best Practices: How to Secure Model Context Protocol - ssojet.com

- Model Context Protocol Servers: A Novel Paradigm for AI ... - ResearchGate (PDF)

- MCP Code Generation: How to Generate MCP Codes Efficiently - BytePlus

- A Deep Dive Into MCP and the Future of AI Tooling - Andreessen Horowitz

- Guide to the Dev Mode MCP Server - Figma Learn

- MCP Servers for Cursor - Cursor Directory

- Modern Test Automation with AI(LLM) and Playwright MCP (Model Context Protocol)

- 6 Top Model Context Protocol Automation Tools (MCP Guide 2025) - testguild.com

- Search package and API docs with docs-mcp-server - Reddit

- Model Context Protocol (MCP) real world use cases, adoptions and comparison to functional calling - Frank Wang
