External Data Sources + MCP Servers = Potential New Risks

How to Safely Use Context7 and Similar MCPs with externals sources

The MCP Boom: Rise of Model Context Protocol Servers

MCP servers are rapidly being adopted by developers - and for good reason. They provide AI coding assistants with the external context they simply don’t have on their own. That could be access to live API data, internal documentation, private code repositories, or specialized developer tools.

Without this external context, even the most advanced models are limited to their training data and whatever the user manually types in. MCP servers bridge that gap, giving models the missing information they need to produce accurate, relevant, and project-specific suggestions.

And now, connecting to an MCP server is no longer a complex configuration task - it’s often just a one-click action inside your IDE. In seconds, developers can link their assistant to exactly the right sources, transforming it from a generic helper into a truly context-aware teammate.

The Threat: External Context Poisoning

It’s already well understood that MCP servers can introduce vulnerabilities when used in a development environment - a cursory check we ran found that more than 40% of MCP servers are intended for use in software development, so this isn’t a marginal use-case. But one of the riskiest scenarios is when an MCP server connects to external sources outside your direct control.

In this setup, the MCP becomes a bridge between your AI assistant and a live data feed, API, or repository on the internet. The MCP is merely passing along the connected feed, and the AI agent is neither aware of the source, nor can it verify that it’s trusted. If that external source is compromised - or simply contains bad patterns - it can inject harmful content directly into the model’s context.

This threat is called external context poisoning. It can manipulate the AI to::

- Suggest insecure coding practices

- Recommend outdated or vulnerable dependencies

- Insert backdoors or logic flaws

- Provide malicious instructions to be executed by the agent

The real danger comes when the AI agent consuming this context doesn’t verify its trustworthiness. In that case, untrusted or unvetted data can flow directly into your codebase or execution environment without any safeguards in place.

A Case in Point: Context7 as an External MCP Source

Context7 is a popular MCP server that delivers up-to-date documentation directly into LLMs and AI-powered code editors. Its purpose is to give developers instant access to the latest API references, library usage examples, and framework guides - without ever leaving their IDE.

This makes it an extremely useful tool: instead of searching the web or switching tabs, developers get accurate, context-relevant documentation inline with their coding workflow. It bridges the gap between static model knowledge and the fast-changing reality of modern software development.

However, because Context7 connects to external documentation sources, it also falls into one of the riskiest MCP categories. If those external sources are compromised or manipulated, they can feed poisoned or misleading instructions into the AI’s context. An AI agent that consumes this unverified content may execute harmful commands, introduce vulnerabilities, or embed insecure code patterns - without the developer realizing it.

We looked at Context7 because it’s one of the most widely adopted in the developer ecosystem:

- 25K+ GitHub stars - Its open-source MCP server has become a standout project, signaling strong community interest and trust.

- Featured in VS Code’s curated MCP list - It’s included in Microsoft’s official selection of MCP servers, making it highly visible to developers exploring MCP integrations.

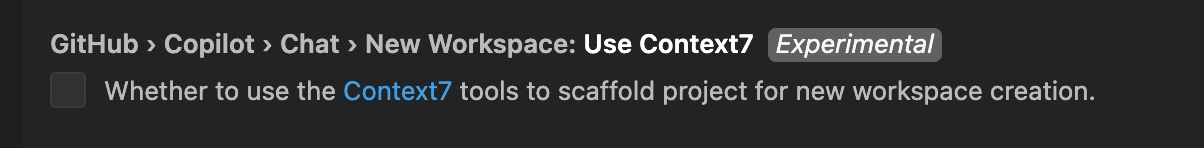

- Built into GitHub Copilot’s VS Code integration - As of VS Code v1.103, Context7 powers experimental project scaffolding directly inside the editor.

This combination of open-source credibility, official endorsements, and deep IDE integration has cemented Context7’s position as a go-to MCP server for developers worldwide. This can also foster a false sense of security in using it, with no regard to how one uses it or what external data sources are connected to it.

What Our Research Team Found

The Backslash Security research team analyzed how the Context7 MCP server works and whether it could be abused by bad actors.

We found ways to poison AI code using external data source via the Context7 MCP, and have initiated responsible disclosure with the Upstash team.

It’s worth noting that Context7 includes an explicit disclaimer in its documentation:

“Context7 projects are community-contributed and while we strive to maintain high quality, we cannot guarantee the accuracy, completeness, or security of all library documentation. Projects listed in Context7 are developed and maintained by their respective owners, not by Context7. If you encounter any suspicious, inappropriate, or potentially harmful content, please use the “Report” button on the project page to notify us immediately. We take all reports seriously and will review flagged content promptly to maintain the integrity and safety of our platform. By using Context7, you acknowledge that you do so at your own discretion and risk.”

However, it’s also advisable for developers of MCPs to make it easier for users to apply some guardrails to the use of external data sources, and indeed this is what the Upstash team has been doing, starting from including a trust scoring system that allows them to scan the repos and owners, and in an upcoming release adding mandatory sign-ups for the ability to add sites/repos.

This aligns with our findings: while Context7 offers high-value capabilities, the security of the injected context ultimately depends on the trustworthiness of the external sources it connects to. What we found isn’t a vulnerability in Context7 per se, nor is it something that can be fully solved within an MCP server - rather, it’s a structural issue stemming from the fact that we’re connecting 3rd party data sources through to an AI agent, without knowing or controlling how that AI might use them. It behooves the user to make sure this is done safely.

How to use MCPs with external sources, safely

You can’t just block Context7 and similar MCPs - unless you’re ready for your engineering team to condemn you for slowing them down. Context7 solves a real problem by giving developers instant access to accurate, up-to-date documentation inside their IDE. Removing it would mean more context-switching, longer onboarding, and slower delivery.

Instead, the answer is secure adoption, built on four key mitigation principles:

- Knowledge: Be aware of the risks of connecting MCP servers to external sources. Hopefully, this blog provides that foundational knowledge.

- Visibility: Detect which developers or AI agents are using Context7 in your environment.

- Deeper context: Understand exactly how they are using it and what sources are being queried.

- Secure usage: Use allow-lists (if the MCP supports them) to control which third-party sources are permitted. Ideally, manage these lists centrally rather than relying on individual developers.

- External control: Use an MCP gateway to verify that incoming content has no malicious intent before it reaches the AI assistant.

Imagine an AI coding security platform that provides centralized visibility, enforces security best practices, and free developers to concentrate on software. That’s Backslash — let us show you how, book your demo.

.png)