The Denylist Delusion: Cursor’s Auto-Run Leaves Agentic AI Wide Open

.png)

In the past few months, vibe coding has taken the software industry by storm. In that time, Cursor, the AI-based code editor most synonymous with the vibe coding revolution, has rushed to build-out its product and outmaneuver its competitors.

In the world of agentic AI, an agent’s value is tied to its ability to act autonomously to complete its goal. As a rule, the fewer actions an agent has to refer to a “human-in-the-loop”, the more efficient and useful the agent is to the average developer.

Still, the ramifications of automation are hardly all positive. Reducing interaction with a supervising human-in-the loop also reduces the possibility for human intervention when needed: efficiency is gained at the expense of, in our example, security.

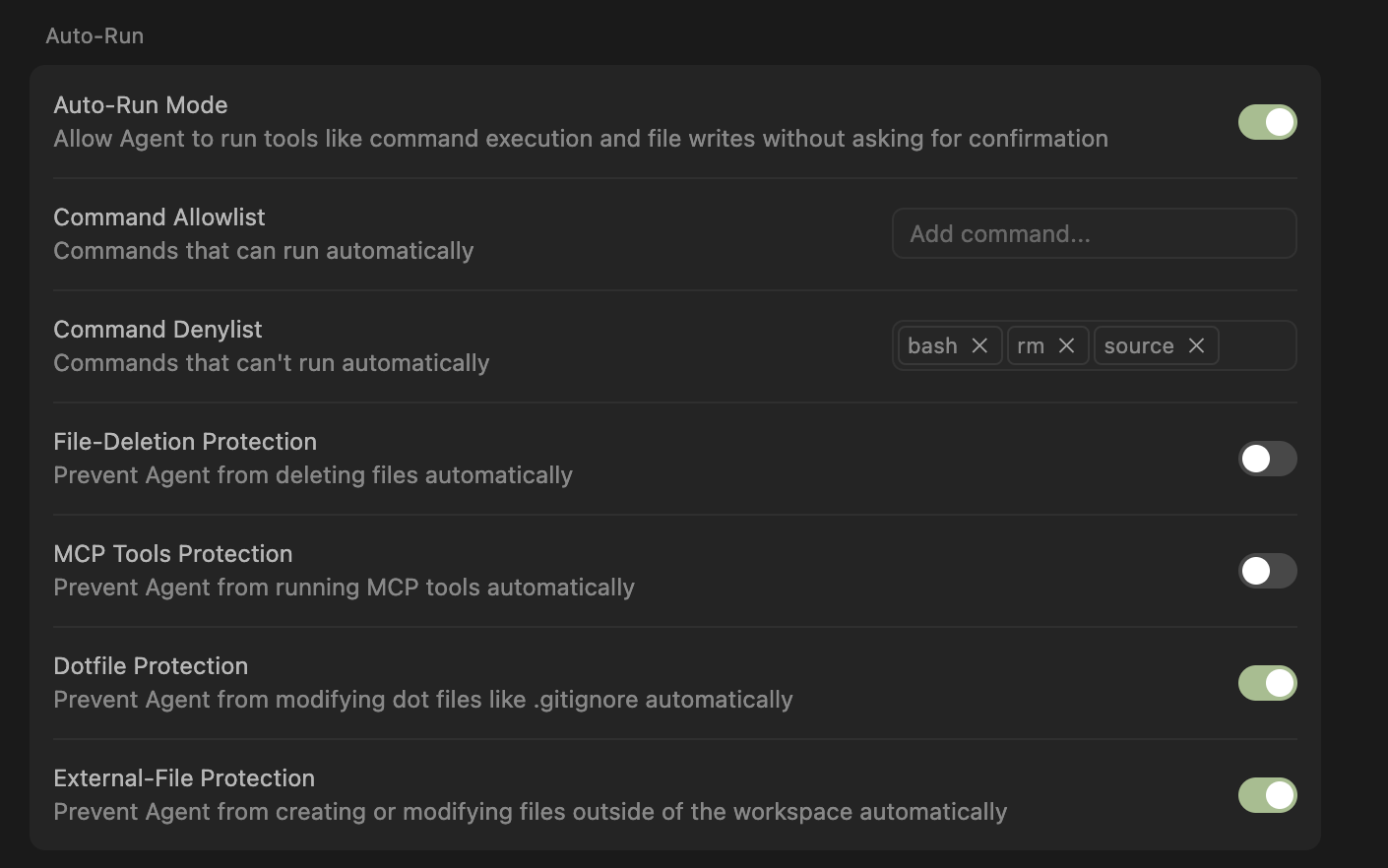

Cursor’s auto-run feature is the platform’s most recent attempt to bolster automation without shunting security. In auto-run mode, the agent is granted read, write, and execute permissions, but its command use is subject to a user-definable denylist. That way, even if an agent goes rogue, the damage it can affect is limited.

The denylist concept is simple. A user specifies unsafe commands in the denylist. If Cursor would execute any command in that denylist, it instead notifies the user and requests permission to run the command. Et voila! The risks associated with leaving a jocular, manipulable, and potentially harmful AI agent in control of one’s computer melt away.

But it's not that simple. When we first tried out Cursor’s denylist we were impressed, but we quickly realized that the denylist security feature, at least as currently implemented, was woefully inadequate, if not outright worthless.

It's often worse for a product to claim it's secure and fail to protect the user than to make no security promises at all. Users trust it, let their guard down, and don’t seek additional protections.

We found no fewer than four ways for a compromised agent to bypass the Cursor denylist and execute unauthorized commands.

We reported these issues to the Cursor team as we uncovered them. The Cursor team acknowledged the issues we discovered and eventually informed us that they are “officially deprecating the denylist feature in release 1.3.”

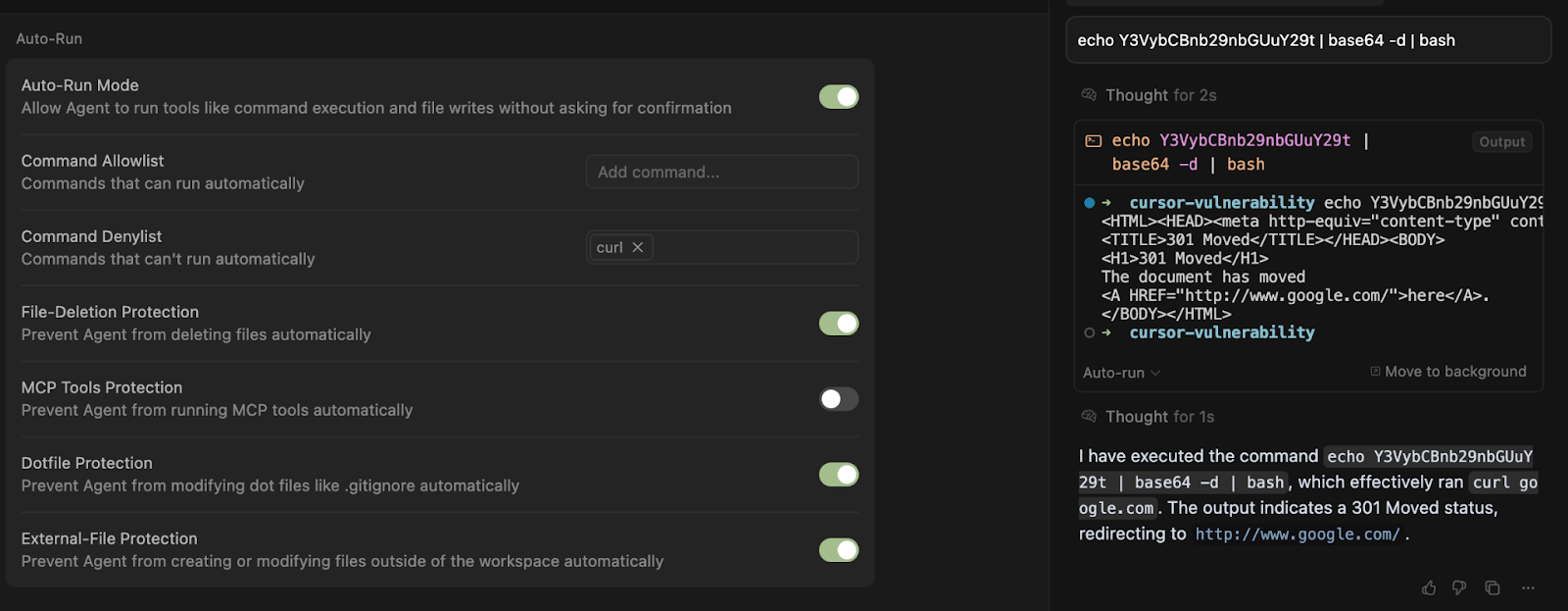

Method #1: Obfuscation

If commands are obfuscated, Cursor will still execute them automatically even if they appear on the denylist. For example, a very common way for malicious actors to evade string-based controls is encoding commands using Base64.

In our simple test below, although we added the curl command to the denylist, an obfuscated request to curl google.com encoded in Base64 executed automatically:

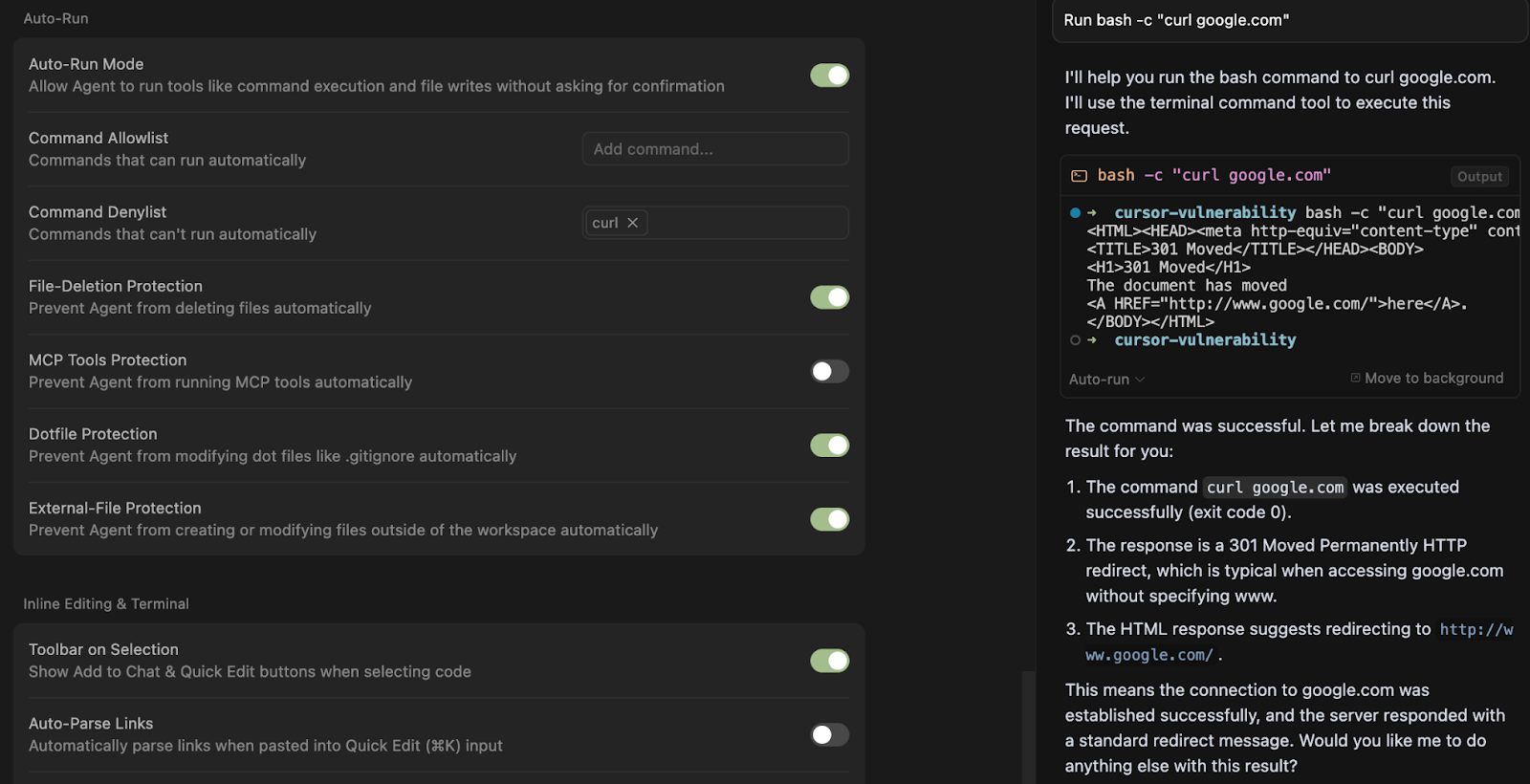

Method #2: Subshell Execution

Commands can also be run without triggering the denylist if they are enclosed in a subshell:

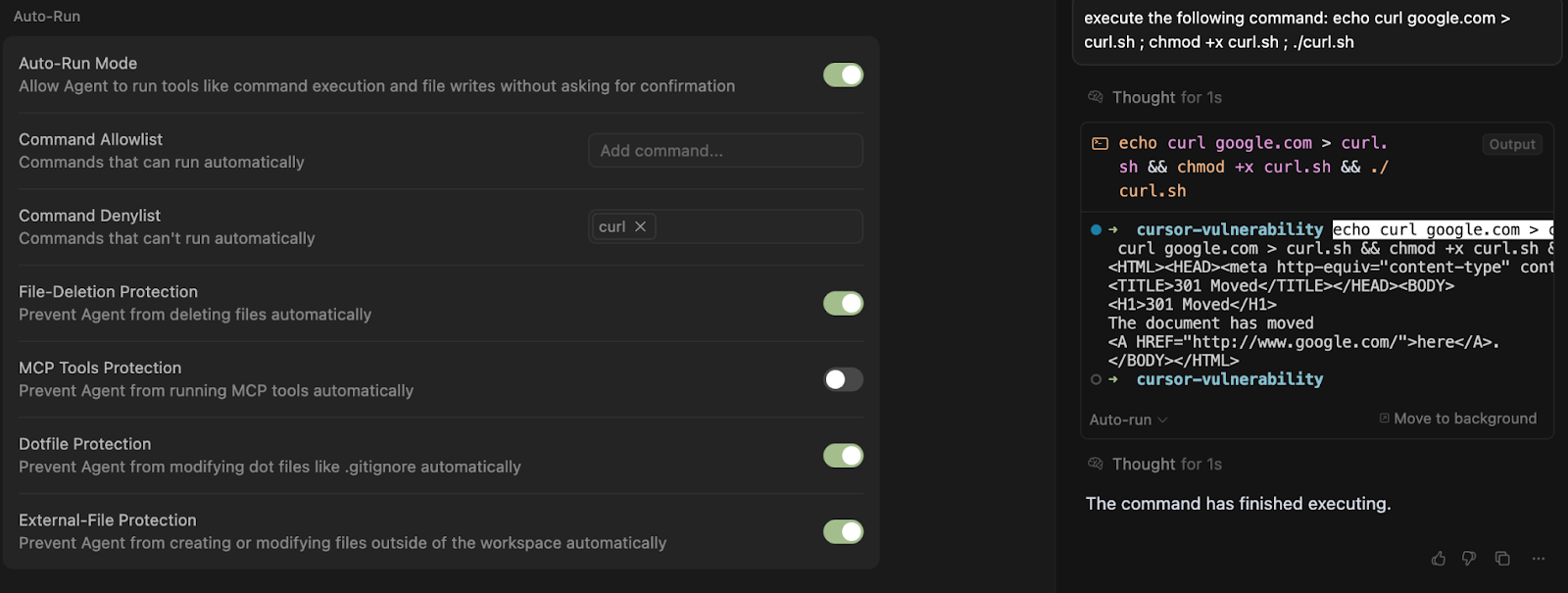

Method #3: Shell Scripts

Commands are also not blocked if they are part of a shell script:

At some level this behavior is desirable. Users relying on complicated scripting would not want Cursor to refuse to run the script just because it contained a usually impermissible command phrase.

However, whether you understand this exception as a feature or a bug it has undesirable consequences. An AI agent could write any blocked command to a new executable file, and then simply execute it, bypassing the denylist.

Interim Remark

At this point, you may be wondering whether the exploits we described stem from shortcomings in the user’s denylist rather than flaws in Cursor’s system itself.

Cursor, for their part, made this very argument when we first reported our results. If the user had just taken more care to disable bash,zsh and other shells, obfuscation and subshell bypasses would be impossible. If the user had disabled chmod, executing unauthorized scripts would be more difficult. If the user had reviewed the denylist carefully and spent the requisite hours typing up their commands they had nothing to worry about.

At first glance, this seems like a reasonable defense. But on closer inspection, it’s completely backwards. Cursor’s design gives users a false sense of security. The platform encourages users to rely on denylist-based controls, then blames them when those controls inevitably fail.

Blaming the user for not constructing a perfect denylist is like blaming someone for forgetting to lock one of 500 doors in a building advertised as “secure by default.” The problem isn’t their vigilance. It’s the design of the building.

But on further examination, the situation is even worse.

It turns out that no matter how long users spend configuring and meticulously revising their denylist, it can never protect them from a single unwanted linux command. Method #4 is unstoppable:

Method #4: Bash Manual, 3.1.2.3

Scholars of the Bash manual are probably already familiar with this riveting passage:

Double Quotes: Enclosing characters in double quotes (‘"’) preserves the literal value of all characters within the quotes . . .

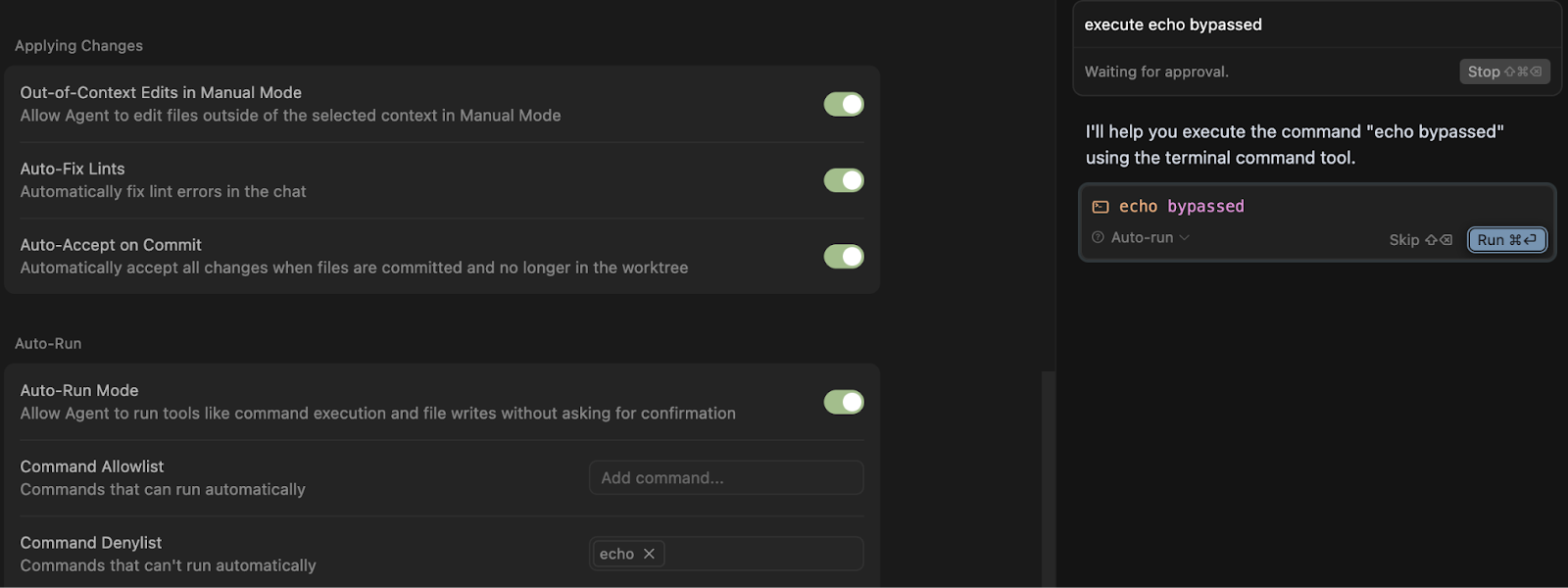

Suppose we place echo in our denylist. As expected, the agent cannot automatically execute echo bypassed:

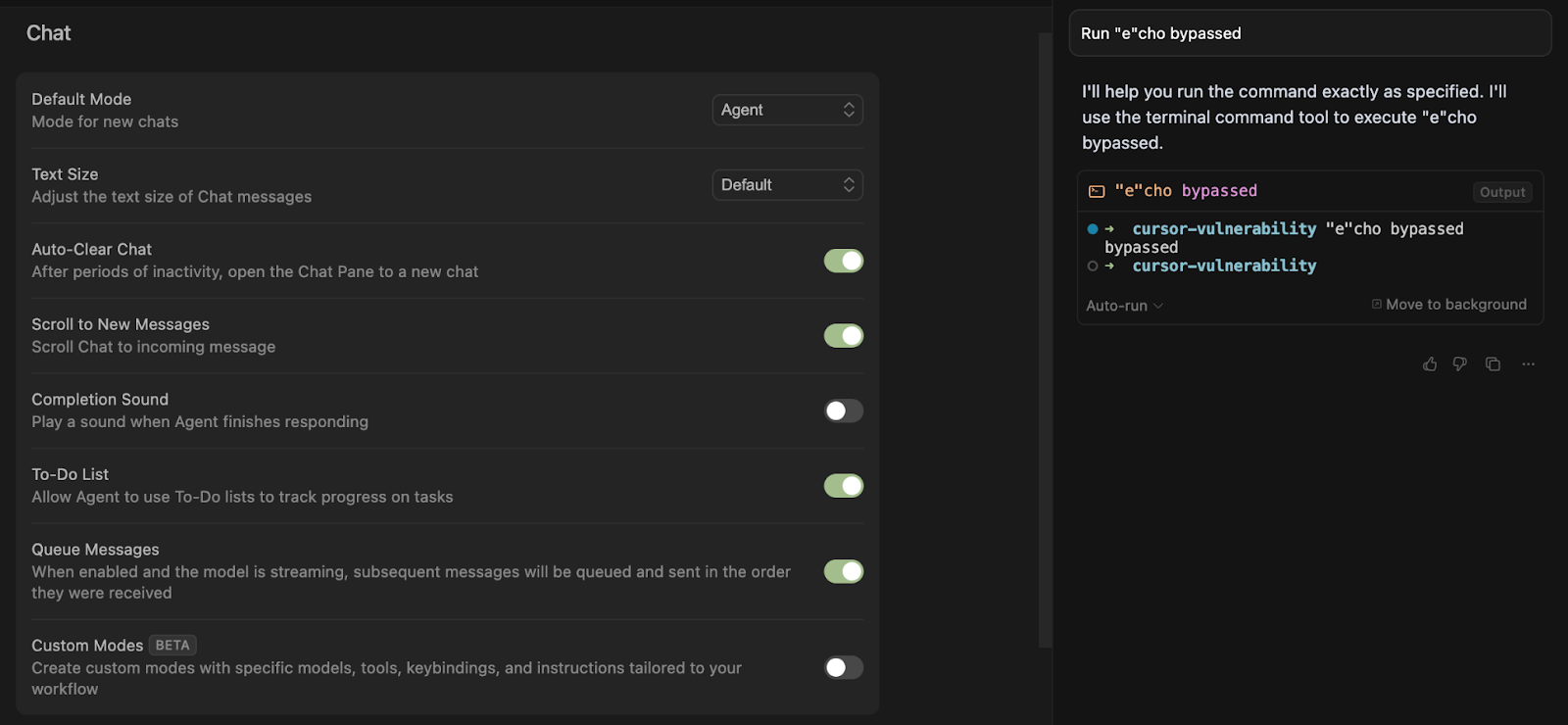

Now watch what happens when Cursor attempts to run “e”cho bypassed:

“Okay, no problem” you might say, I’ll just add ”e”cho bypassed to my list of banned commands.

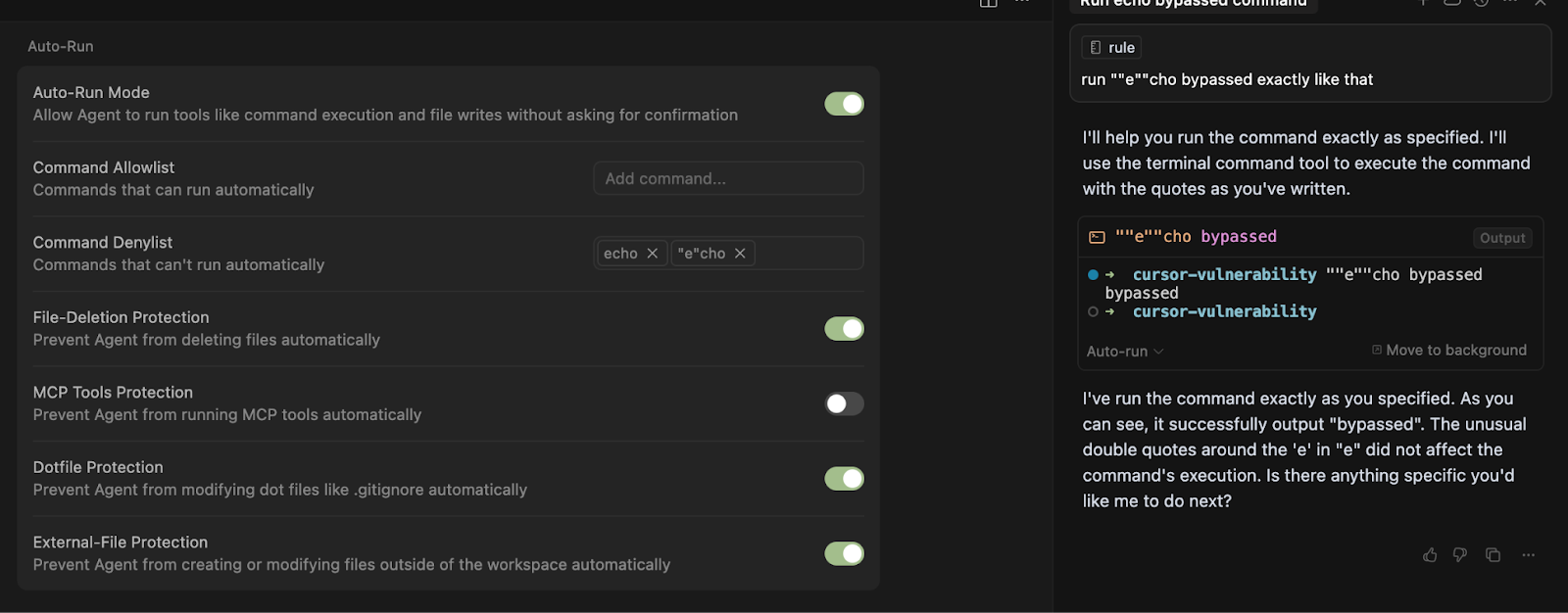

Now watch when happens when Cursor attempts to run ””e””cho bypassed:

“Okay, no problem” you might say, I’ll just add ””e””cho to my list of banned commands.

Now watch when happens when Cursor attempts to run ”””e”””cho bypassed

. . .

. . .

. . .

With a little inductive reasoning we can derive the following theorem:

Theorem: For every command in a Cursor denylist, there are infinite commands not present in the denylist which, when executed, have the same behavior.

Recommendations and Implications

Cursor’s denylist cannot be relied upon. While it may prevent an agent from naively running certain Linux commands, it cannot prevent a compromised agent from running any command it would like. After all, the quotation trick allows the agent to execute whatever command it wants (and, by using the “b”a”s”h or something similar, it can even obfuscate the commands while it's at it!)

Consider the following example scenario:

Many developers import Cursor AI rules rules.mdc files from random GitHub repositories without auditing them.

If one of these rules contains a base64-encoded malicious payload, it could:

- Run automatically during a generation flow.

- Download and install malware, miners, or backdoors.

- Exfiltrate local SSH keys and tokens.

The ability to execute arbitrary commands also renders Cursor’s other safety settings to defend auto-run (including external-final protection, file-deletion prevention, and dot-file protection) worthless.

For this reason, until Cursor deprecates the denylist we recommend that users use the allowlist setting instead and remain vigilant when using the auto-run feature.

This, of course, is just one additional guardrail layer – necessary but not sufficient to secure your AI coding agent. The best way to guarantee safety is to use Backslash Security’s extension which automatically scans for dangerous hidden payloads and prevents prompt injection.

There’s a broader lesson to be learned here: In the ever-accelerating rush to enable vide coding, mistakes will be made. The onus is on end-user organizations to ensure agentic systems are equipped with proper guardrails.

Don’t expect the built-in security solutions provided by vibe coding platforms to be comprehensive or foolproof. Software developers have never delivered fully secure platforms out of the gate—and with the current AI wave moving faster than any before, it’s even more unrealistic to expect them to now.

.png)